Every picture posted to Facebook and Instagram gets a caption generated by an image analysis AI, and that AI just got a lot smarter. The improved system should be a treat for visually impaired users, and may help you find your photos faster in the future.

Alt text is a field in an image’s metadata that describes its contents: “A person standing in a field with a horse,” or “a dog on a boat.” This lets the image be understood by people who can’t see it.

These descriptions are often added manually by a photographer or publication, but people uploading photos to social media generally don’t bother, if they even have the option. So the relatively recent ability to generate one automatically (the technology has only gotten good enough in the last couple of years) has been extremely helpful in making social media more accessible in general.

Facebook created its Automatic Alt Text system in 2016, which is eons ago in the field of machine learning. The team has since cooked up many improvements to it, making it faster and more detailed, and the latest update adds an option to generate a more detailed description on demand.

The improved system now recognizes around 1,200 items and concepts, 10 times more than it did at the start, and its descriptions include more detail. What was once “Two people by a building” may now be “A selfie of two people by the Eiffel Tower.” (The actual descriptions hedge with “may be…” and will avoid including wild guesses.)

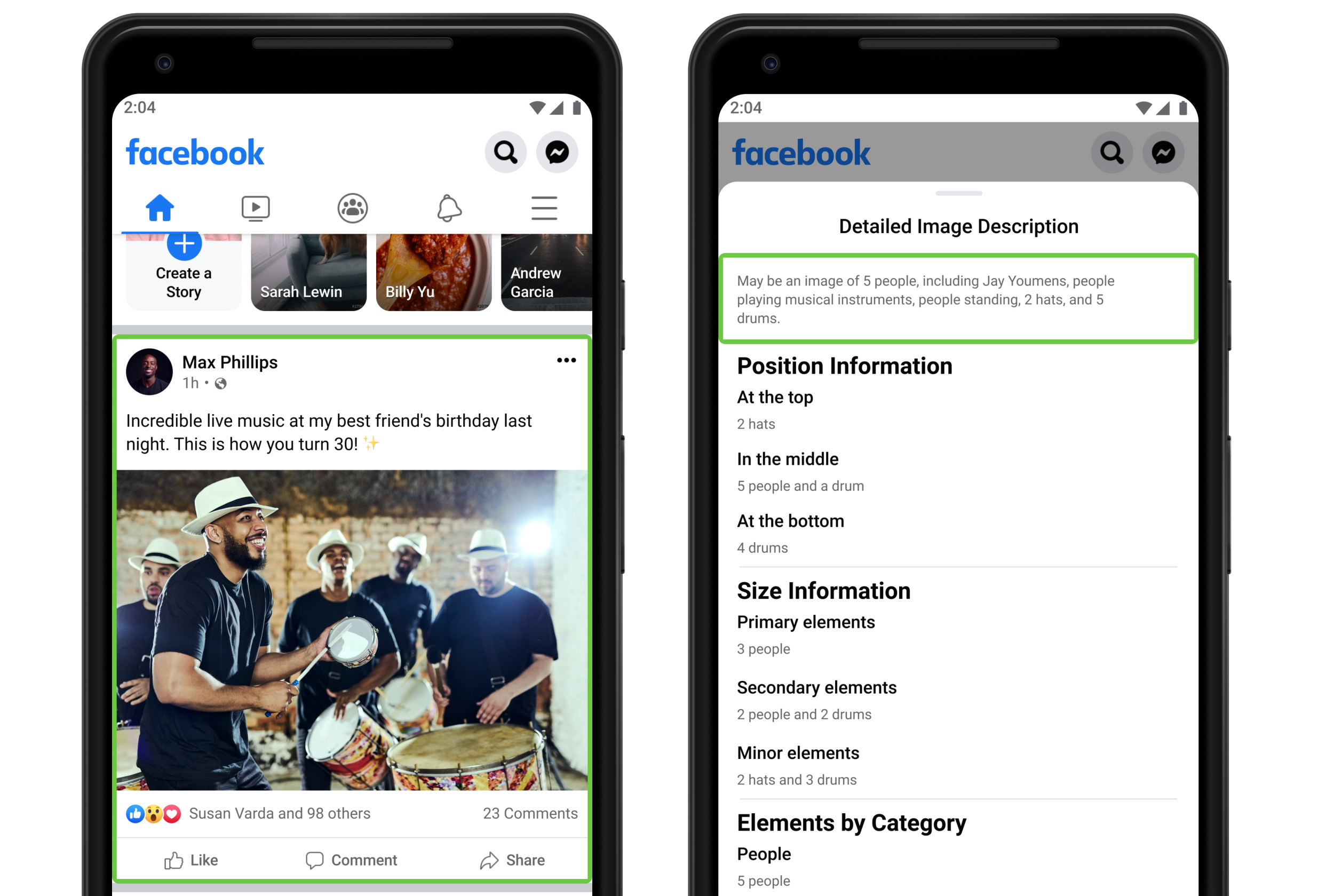
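
To make the hedging concrete, here is a minimal sketch of how a caption could be assembled so that low-confidence guesses are left out. It is not Facebook's implementation: the `Detection` type, the `build_alt_text` function, the 0.8 threshold and the exact wording are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One recognized item or concept (hypothetical structure)."""
    label: str
    confidence: float  # 0.0 to 1.0, as reported by some image model


def build_alt_text(detections, threshold=0.8):
    """Compose a hedged description from sufficiently confident detections.

    The threshold value is an assumption; anything below it is treated
    as a wild guess and dropped from the description.
    """
    kept = [d.label for d in detections if d.confidence >= threshold]
    if not kept:
        return "No description available"
    if len(kept) == 1:
        listing = kept[0]
    else:
        listing = ", ".join(kept[:-1]) + " and " + kept[-1]
    # Hedge the wording rather than asserting the contents outright.
    return "May be an image of " + listing


example = [
    Detection("2 people", 0.95),
    Detection("the Eiffel Tower", 0.90),
    Detection("an umbrella", 0.30),  # too uncertain, so it is omitted
]
print(build_alt_text(example))
```

Raising the threshold trades detail for reliability, which matters more than usual here because the reader cannot check the description against the picture.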

But there’s more detail than that, even if it’s not always relevant. For instance, in this image the AI notes the relative positions of the people and objects:

Obviously the people are above the drums, and the hats are above the people, none of which really needs to be said for someone to get the gist. But consider an image described as “A house and some trees and a mountain.” Is the house on the mountain or in front of it? Are the trees in front of or behind the house, or maybe on the mountain in the distance?

To describe the image adequately, these details should be filled in, even if the general idea can be conveyed in fewer words. If a sighted person wants more detail they can look closer or click the image for a bigger version; someone who can’t do that now has a similar option with this “generate detailed image description” command. (Activate it with a long press in the Android app or a custom action in iOS.)

Perhaps the new description would be something like “A house and some trees in front of a mountain with snow on it.” That paints a better picture, right? (To be clear, these examples are made up, but it’s the sort of improvement that’s expected.)

The new detailed description feature will come to Facebook first for testing, though the improved vocabulary will appear on Instagram soon. The descriptions are also kept simple so they can be easily translated to other languages already supported by the apps, though the feature may not roll out in other countries simultaneously.

from Social – TechCrunch https://ift.tt/2NlsBlO
